In a traditional ETL pipeline, you process data in batches from source databases to a data warehouse. It’s challenging to build an enterprise ETL workflow from scratch, so you typically rely on ETL tools such as Stitch or Blendo, which simplify and automate much of the process. To build an ETL pipeline with batch processing, you need to extract data from the sources, transform it, and load it into the warehouse.
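A batch pipeline of this kind can be sketched in a few functions. This is a minimal illustration, not how Stitch or Blendo work internally. It makes several assumptions. Python's built-in `sqlite3` stands in for both the source database and the warehouse. The `orders` and `daily_sales` tables are hypothetical. The tiny batch size is for demonstration only. The transform step summarizes raw rows into daily totals, which is the kind of size-reducing summarization ETL typically performs.

```python
import sqlite3
from collections import defaultdict
from typing import Iterable, Iterator

BATCH_SIZE = 2  # hypothetical; real jobs fetch thousands of rows per batch


def extract(source: sqlite3.Connection, batch_size: int = BATCH_SIZE) -> Iterator[list]:
    """Read the hypothetical `orders` table from the source database in batches."""
    cursor = source.execute("SELECT order_date, amount FROM orders ORDER BY order_date")
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            return
        yield batch


def transform(batches: Iterable[list]) -> list:
    """Summarize raw orders into one total per day, shrinking the data set."""
    totals = defaultdict(float)
    for batch in batches:
        for order_date, amount in batch:
            totals[order_date] += amount
    return sorted(totals.items())


def load(warehouse: sqlite3.Connection, rows: list) -> None:
    """Write the daily totals into a warehouse table, replacing earlier runs."""
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales (day TEXT PRIMARY KEY, total REAL)"
    )
    warehouse.executemany("INSERT OR REPLACE INTO daily_sales VALUES (?, ?)", rows)
    warehouse.commit()


def run_batch_etl(source: sqlite3.Connection, warehouse: sqlite3.Connection) -> None:
    """Extract in batches, transform, then load into the warehouse."""
    load(warehouse, transform(extract(source)))


if __name__ == "__main__":
    src = sqlite3.connect(":memory:")
    src.execute("CREATE TABLE orders (order_date TEXT, amount REAL)")
    src.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("2024-01-01", 10.0), ("2024-01-01", 5.0), ("2024-01-02", 7.5)],
    )
    dwh = sqlite3.connect(":memory:")
    run_batch_etl(src, dwh)
    print(dwh.execute("SELECT day, total FROM daily_sales ORDER BY day").fetchall())
```

Run as a script, the demo prints `[('2024-01-01', 15.0), ('2024-01-02', 7.5)]`. Because the load uses `INSERT OR REPLACE`, rerunning the same batch overwrites each day's total instead of duplicating it. That is one simple way to keep nightly reruns safe.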

Building an ETL Pipeline with Batch Processing

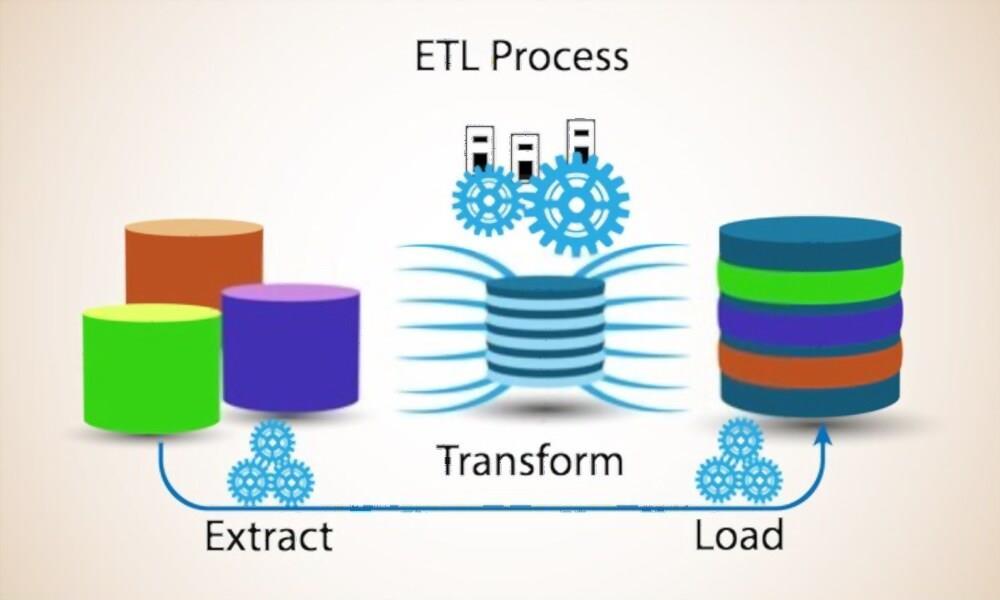


3 Ways to Build An ETL Process with Examples

Are you stuck in the past? Are you still using the slow and old-fashioned Extract, Transform, Load (ETL) paradigm to process data? Do you wish there were more straightforward and faster methods out there?

Well, wish no longer! In this article, we’ll show you how to implement two of the most cutting-edge data management techniques, which provide huge time, money, and efficiency gains over the traditional Extract, Transform, Load model. One such method is stream processing, which lets you deal with real-time data on the fly. The other is automated data management that bypasses traditional ETL and uses the Extract, Load, Transform (ELT) paradigm. For the former, we’ll use Kafka, and for the latter, we’ll use Panoply’s data management platform.

But first, let’s give you a benchmark to work with: the conventional and cumbersome Extract, Transform, Load process.

What is ETL (Extract, Transform, Load)?

ETL (Extract, Transform, Load) is an automated process that takes raw data, extracts the information required for analysis, transforms it into a format that can serve business needs, and loads it into a data warehouse. ETL typically summarizes data to reduce its size and improve performance for specific types of analysis.

When you build an ETL infrastructure, you must first integrate data from a variety of sources. Then you must carefully plan and test to ensure you transform the data correctly. This process is complicated and time-consuming.

Let’s start by looking at how to do this the traditional way: batch processing.

When to choose EMR:
1. The use case involves volatile (short-lived) clusters, mostly for batch processing (Day MINUS scenarios), which makes EMR a cost-effective solution for batch jobs.
2. It is an attractive option for 24/7 Spark Streaming programs.
3. You need a Hadoop ecosystem and related tools (like HDFS, Hive, Hue, Impala, etc.).
4. You need control over the infrastructure and its tuning parameters.

Also, going back to the OP's use case of 1 TB of data processing.
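A volatile EMR cluster for batch jobs is one that starts, runs its Spark step, and terminates itself. The sketch below builds the keyword arguments for boto3's EMR `run_job_flow` call to illustrate that idea. It rests on several assumptions:

- The function name `build_transient_cluster_request` is hypothetical.
- The instance types, release label, S3 paths, and default role names are placeholders to adjust.
- The field names follow the `run_job_flow` request shape as I understand it and should be checked against the boto3 reference before use.

Spot capacity is placed only on task nodes, and executor memory and count are passed as `spark-submit` flags. These reflect the cost and tuning-control points listed above.

```python
def build_transient_cluster_request(
    name: str,
    script_s3_uri: str,
    log_uri: str,
    release_label: str = "emr-6.15.0",  # assumption: use a release available to you
    executor_memory: str = "4g",
    num_executors: int = 4,
    spot_task_nodes: int = 2,
) -> dict:
    """Hypothetical helper: kwargs for boto3's EMR `run_job_flow` describing a
    cluster that runs one Spark batch step and then shuts itself down."""
    instance_groups = [
        {"Name": "primary", "InstanceRole": "MASTER", "Market": "ON_DEMAND",
         "InstanceType": "m5.xlarge", "InstanceCount": 1},
        {"Name": "core", "InstanceRole": "CORE", "Market": "ON_DEMAND",
         "InstanceType": "m5.xlarge", "InstanceCount": 1},
    ]
    if spot_task_nodes > 0:
        # Task nodes hold no HDFS data, so losing a Spot node after its
        # 2-minute interruption notice costs compute, not stored data.
        instance_groups.append(
            {"Name": "task-spot", "InstanceRole": "TASK", "Market": "SPOT",
             "InstanceType": "m5.xlarge", "InstanceCount": spot_task_nodes})
    return {
        "Name": name,
        "ReleaseLabel": release_label,
        "LogUri": log_uri,
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": instance_groups,
            # False = terminate once no steps remain, i.e. a volatile cluster.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "Steps": [{
            "Name": "batch-etl",
            "ActionOnFailure": "TERMINATE_CLUSTER",
            "HadoopJarStep": {
                "Jar": "command-runner.jar",
                # Executor sizing is set explicitly, unlike a serverless setup.
                "Args": ["spark-submit", "--deploy-mode", "cluster",
                         "--executor-memory", executor_memory,
                         "--num-executors", str(num_executors),
                         script_s3_uri],
            },
        }],
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }
```

To submit it, you would pass the dictionary as `boto3.client("emr").run_job_flow(**request)`. This assumes the default EMR roles exist, for example after running `aws emr create-default-roles`.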
With EMR, if Spot Instances are used, an interruption might occur with only a 2-minute notification (though the system recovers automatically). You also need to identify the type of node needed and set up autoscaling rules, etc.

Skills needed for EMR: DevOps to set up and manage EMR, intermediate knowledge of orchestration via CloudWatch and Step Functions, and PySpark.

When to choose Glue:
1. You are not worried about costs but need highly resilient infrastructure.
2. Batch setups in which the job might complete in a fixed time.
3. Short real-time streaming jobs that need to run for, say, a few hours a day.
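Orchestration via CloudWatch and Step Functions can be illustrated with a Step Functions state machine. The sketch builds one as a Python dict:

1. Create an EMR cluster.
2. Run one Spark step.
3. Terminate the cluster, even if the step fails.

It assumes the Step Functions EMR service integrations `createCluster.sync`, `addStep.sync`, and `terminateCluster.sync`. The cluster settings, names, and S3 paths are hypothetical placeholders. A CloudWatch (EventBridge) schedule rule, for example `cron(0 2 * * ? *)`, could then start the state machine nightly; that trigger is not shown here.

```python
import json


def build_state_machine(cluster_name: str, script_s3_uri: str, log_uri: str) -> dict:
    """Hypothetical Amazon States Language definition: create an EMR cluster,
    run one Spark step, then terminate the cluster whether or not it succeeded."""
    cluster_id = "$.cluster.ClusterId"  # where createCluster.sync output is stored
    return {
        "Comment": "Hypothetical nightly batch ETL on a transient EMR cluster",
        "StartAt": "CreateCluster",
        "States": {
            "CreateCluster": {
                "Type": "Task",
                "Resource": "arn:aws:states:::elasticmapreduce:createCluster.sync",
                "Parameters": {
                    "Name": cluster_name,
                    "ReleaseLabel": "emr-6.15.0",  # assumption
                    "LogUri": log_uri,
                    "Applications": [{"Name": "Spark"}],
                    "ServiceRole": "EMR_DefaultRole",
                    "JobFlowRole": "EMR_EC2_DefaultRole",
                    "Instances": {
                        # Stay alive so the next state can add a step.
                        "KeepJobFlowAliveWhenNoSteps": True,
                        "InstanceGroups": [
                            {"InstanceRole": "MASTER", "InstanceType": "m5.xlarge", "InstanceCount": 1},
                            {"InstanceRole": "CORE", "InstanceType": "m5.xlarge", "InstanceCount": 2},
                        ],
                    },
                },
                "ResultPath": "$.cluster",
                "Next": "RunEtlStep",
            },
            "RunEtlStep": {
                "Type": "Task",
                "Resource": "arn:aws:states:::elasticmapreduce:addStep.sync",
                "Parameters": {
                    "ClusterId.$": cluster_id,
                    "Step": {
                        "Name": "batch-etl",
                        "ActionOnFailure": "CONTINUE",
                        "HadoopJarStep": {
                            "Jar": "command-runner.jar",
                            "Args": ["spark-submit", "--deploy-mode", "cluster", script_s3_uri],
                        },
                    },
                },
                "ResultPath": "$.step",
                # On any failure, record the error and still terminate the cluster.
                "Catch": [{"ErrorEquals": ["States.ALL"], "ResultPath": "$.error",
                           "Next": "TerminateCluster"}],
                "Next": "TerminateCluster",
            },
            "TerminateCluster": {
                "Type": "Task",
                "Resource": "arn:aws:states:::elasticmapreduce:terminateCluster.sync",
                "Parameters": {"ClusterId.$": cluster_id},
                "End": True,
            },
        },
    }


if __name__ == "__main__":
    definition = build_state_machine(
        "nightly-etl", "s3://example-bucket/etl.py", "s3://example-bucket/logs/")
    print(json.dumps(definition, indent=2))
```

As written, the execution ends successfully even when the step fails. A production workflow would likely add a `Fail` state after termination when `$.error` is present.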

Orchestration is handled through CloudWatch triggers and Step Functions. EMR is also much cheaper: due to Spot Instance functionality, there have been cases of savings of up to 50% over Glue costs, and even more depending on the use case.

Most of the differences are already listed, so I'll focus more on specific use cases.

When to choose Glue:
1. The data is in a table structure and in a known format (CSV, Parquet, ORC, JSON).
2. Lineage is required: if you need the data lineage graph while developing your ETL job, prefer developing the ETL using Glue native libraries.
3. The developers don't need to tweak performance parameters such as the number of executors, memory per executor, and so on.
4. You don't want the overhead of managing a large cluster and want to pay only for what you use.

When to choose EMR:
1. The data is huge but semi-structured or unstructured, so you can't take advantage of the Glue catalog.
2. You care only about the outputs, and lineage is not required.
3. You need to define more memory per executor, depending on the type of your job and its requirements.
4. You can manage the cluster easily, or you have many jobs that can run concurrently on the cluster, saving you money.
5. In the case of structured data, you should use EMR when you want more Hadoop capabilities, such as Hive or Presto, for further analytics.
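The Glue-versus-EMR rules of thumb above can be condensed into a small decision helper. This is only a sketch. `JobProfile` and `suggest_service` are hypothetical names. The precedence is my own assumption: a lineage requirement or no appetite for cluster management settles on Glue first, before the EMR signals are weighed. Real decisions also depend on cost, team skills, and data volume.

```python
from dataclasses import dataclass


@dataclass
class JobProfile:
    """Hypothetical description of an ETL job, one flag per criterion above."""
    structured_known_format: bool   # tables in CSV, Parquet, ORC or JSON
    needs_lineage: bool             # data lineage graph required
    needs_executor_tuning: bool     # e.g. custom memory per executor
    needs_hadoop_tools: bool        # Hive, Presto, and similar
    team_can_manage_cluster: bool   # willing to operate and tune a cluster


def suggest_service(job: JobProfile) -> str:
    """Return "Glue" or "EMR" using the rules of thumb listed above."""
    # Assumed precedence: lineage via Glue native libraries, or no appetite
    # for cluster management, points to Glue regardless of other criteria.
    if job.needs_lineage or not job.team_can_manage_cluster:
        return "Glue"
    emr_signals = (
        not job.structured_known_format,  # little benefit from the Glue catalog
        job.needs_executor_tuning,
        job.needs_hadoop_tools,
    )
    return "EMR" if any(emr_signals) else "Glue"
```

For example, `suggest_service(JobProfile(False, False, False, False, True))` returns `"EMR"`. That profile describes unstructured data with no lineage requirement and a team able to manage a cluster.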
